CryoEM Computer Vision Models

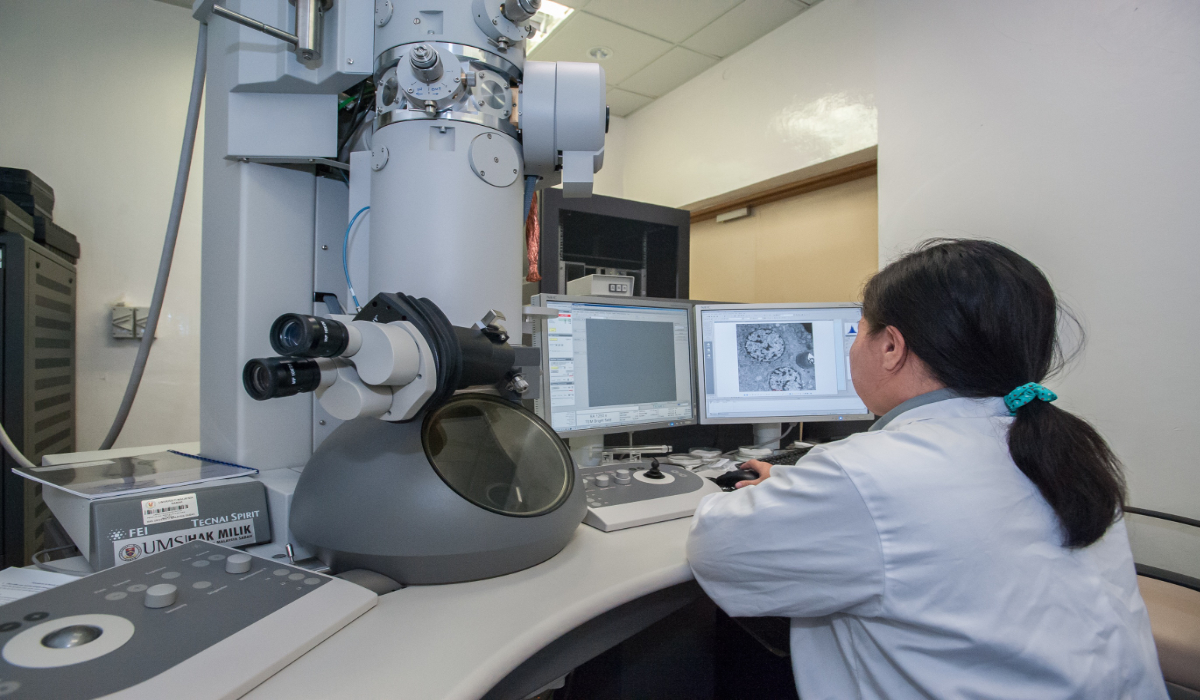

The Problem

Electron microscopy data collection requires highly trained experts to manually identify suitable imaging locations on CryoEM grids. This manual process creates significant bottlenecks: qualified microscopists require years of specialized training and are in extremely short supply, while the manual identification process takes hours per grid.

Transfer Learning Implementation

I implemented transfer learning, using EfficientNet as the base model, to detect suitable imaging locations on CryoEM grids.

The model detects squares (low-resolution images) and holes (medium-resolution images) where ice thickness is usable, while identifying and avoiding areas with grid contamination. This automated approach reproduces the expert selection process, examines more candidate regions than manual inspection allows, and enables unattended microscope operation. Identification time dropped from hours to minutes.

Technical Implementation

Training dataset: ~2,000 images with bounding boxes around target regions, collected from researchers and during on-site testing sessions. Most development work used simulation software since microscope access was limited to one day per month.

Data augmentation: Used the Albumentations library for flipping, rotation, hue adjustment, and contrast changes to expand the effective training dataset.

Model evaluation: mAP (mean Average Precision) and IoU (Intersection over Union) metrics, with acceptance thresholds determined by team consensus.
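
For reference, the IoU metric mentioned above can be computed as in the minimal sketch below. The `(x_min, y_min, x_max, y_max)` box format and the function name `iou` are assumptions made for illustration; the box format actually used in the project is not stated here.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes.

    Boxes are (x_min, y_min, x_max, y_max); this format is an assumption.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    # Degenerate (zero-area) inputs yield 0.0 rather than dividing by zero.
    return intersection / union if union > 0 else 0.0
```

When computing mAP, a predicted box is typically counted as a correct detection only if its IoU with a ground-truth box meets a chosen threshold; in this project that threshold was set by team consensus.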

Cross-platform deployment: The model needed to work with two different microscope control systems (SerialEM and Latitude). For Latitude compatibility, I experimentally determined the necessary hue and contrast adjustments to normalize images for the model.

Validation approach: Tested model predictions using simulation software with manual evaluation. Since no gold standard dataset existed for this application, validation focused on whether the model selected reasonable targets while avoiding unsuitable regions.

Semi-Supervised Annotation Pipeline

I designed a semi-supervised annotation system to continuously improve model performance. The pipeline worked as follows:

- Feed unlabeled images into the trained model to generate predictions

- Human annotators review and adjust the predicted bounding boxes

- Retrain the model with the corrected annotations

- Iterate until performance metrics reach acceptable thresholds

This approach grew the pool of high-quality training data efficiently, because annotators corrected model predictions instead of annotating every image from scratch.
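
The loop described above can be sketched in a few lines of Python. This is only an outline under stated assumptions: `model`, `review`, `retrain`, and `evaluate` are hypothetical callables standing in for the trained detector, the human review step in the annotation tool, the training job, and the metric computation respectively.

```python
def annotation_round(model, unlabeled_images, review, retrain):
    """One pass of predict, human review, retrain.

    All arguments are hypothetical stand-ins:
      model:   callable mapping an image to a list of predicted boxes
      review:  callable (image, predicted_boxes) returning corrected boxes
      retrain: callable (model, labeled_pairs) returning an updated model
    """
    labeled = []
    for image in unlabeled_images:
        predicted = model(image)              # model proposes boxes
        corrected = review(image, predicted)  # annotator adjusts them
        labeled.append((image, corrected))
    return retrain(model, labeled), labeled


def improve_until(model, batches, review, retrain, evaluate, target):
    """Run annotation rounds until `evaluate(model)` reaches `target`
    or the batches of unlabeled images run out."""
    for batch in batches:
        if evaluate(model) >= target:
            break
        model, _ = annotation_round(model, batch, review, retrain)
    return model
```

Keeping the review step as an injected callable means the same loop works whether corrections come from an annotation tool export or from a test stub.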

Multi-Shot KNN System

Acquiring images one at a time is slow, because repositioning the camera between shots takes minutes. To reduce this, I combined Latitude's multi-shot beam scattering capability with a K-nearest neighbors search over the distances between targets to identify optimal groupings. The algorithm formed groups in decreasing size (9→7→5→3→single shot) to maximize use of beam splitting and minimize camera repositioning. Result: up to a 9x speed improvement.
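
A minimal sketch of this kind of grouping is shown below. It is not the production algorithm: it uses a brute-force nearest-neighbor search from the standard library instead of scikit-learn, and the `max_shift` parameter (the largest allowed distance from a group's seed target to any other member) is a hypothetical constraint introduced for illustration.

```python
import math

GROUP_SIZES = (9, 7, 5, 3)  # sizes from the text; singles are whatever remains


def group_targets(targets, max_shift):
    """Greedily group (x, y) targets for multi-shot acquisition.

    `max_shift` (hypothetical) is the largest allowed distance between a
    group's seed target and any other member of that group.
    Returns a list of groups, each a list of indices into `targets`.
    """
    unassigned = set(range(len(targets)))
    groups = []

    for size in GROUP_SIZES:
        formed = True
        while formed and len(unassigned) >= size:
            formed = False
            for seed in sorted(unassigned):
                # Nearest unassigned neighbours of the seed, closest first.
                neighbours = sorted(
                    (i for i in sorted(unassigned) if i != seed),
                    key=lambda i: math.dist(targets[seed], targets[i]),
                )[: size - 1]
                farthest = math.dist(targets[seed], targets[neighbours[-1]])
                if farthest <= max_shift:
                    group = [seed] + neighbours
                    groups.append(group)
                    unassigned -= set(group)
                    formed = True
                    break  # rescan with the reduced target set

    # Anything left over is acquired as a single shot.
    groups.extend([i] for i in sorted(unassigned))
    return groups
```

With a full group of 9, one repositioning serves nine exposures, which is consistent with the up-to-9x figure above.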

Metadata Database Design

I designed and implemented a PostgreSQL database to track image metadata, grid locations, and microscope settings. The database integrated with the data collection workflow, enabling researchers to access this information for subsequent analysis.
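
To illustrate the kind of schema this implies, here is a small sketch. Every table and column name is hypothetical, and the example runs against an in-memory SQLite database from the Python standard library purely as a stand-in for PostgreSQL, so that it is self-contained.

```python
import sqlite3

# Hypothetical schema: table and column names are illustrative only.
SCHEMA = """
CREATE TABLE grid (
    grid_id     INTEGER PRIMARY KEY,
    label       TEXT NOT NULL
);
CREATE TABLE image (
    image_id        INTEGER PRIMARY KEY,
    grid_id         INTEGER NOT NULL REFERENCES grid(grid_id),
    file_path       TEXT NOT NULL,
    stage_x_um      REAL NOT NULL,   -- grid location of the exposure
    stage_y_um      REAL NOT NULL,
    magnification   REAL,            -- microscope settings at acquisition
    defocus_um      REAL,
    acquired_at     TEXT
);
"""


def create_demo_db():
    """Build the schema in an in-memory SQLite database (PostgreSQL stand-in)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def images_for_grid(conn, label):
    """Return (file_path, stage_x_um, stage_y_um) rows for one grid."""
    query = """
        SELECT i.file_path, i.stage_x_um, i.stage_y_um
        FROM image AS i JOIN grid AS g ON g.grid_id = i.grid_id
        WHERE g.label = ?
        ORDER BY i.image_id
    """
    return conn.execute(query, (label,)).fetchall()
```

Against a real PostgreSQL server the same queries would go through a driver such as psycopg, which uses `%s` placeholders rather than `?`, and the column types would likely be adjusted (for example, a timestamp type for `acquired_at`).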

Deployment Challenges

Because of security constraints, the model had to be installed manually at each microscope site. SerialEM's interface required a hands-on deployment process, while the Latitude plugin needed a separate on-site PC.

Team Leadership and Strategic Contributions

I managed a team of 3 direct reports, reported directly to the CTO, and contributed to strategic initiatives including patent applications and technical presentations delivered by the CTO.

Development Environment

- Deep Learning: PyTorch, TorchVision, EfficientNet (Transfer Learning)

- Data Augmentation: Albumentations

- Image Processing: Python, NumPy, scikit-image

- Machine Learning: scikit-learn (K-Nearest Neighbors)

- Visualization: Matplotlib

- Annotation Tools: Voxel51, Label Studio

- Database: PostgreSQL, pgAdmin, DBeaver

- Scientific Software: EMAN2, IMOD, SerialEM, MRCFile

- Development Tools: JupyterLab, Visual Studio Code